There is a fantastic audit of Oregon’s attempt to implement the Affordable Care Act (ACA) (“Obamacare”). Highlights include:

- Oregon combined ACA implementation with a complex project that determines if people eligible for healthcare subsidies are also eligible for other government programs. To state the obvious: you NEVER combine a high risk project with another high risk project.

- Oregon chose Oracle as the software provider and paid them on a time and materials basis. Contractors are usually paid on a deliverable or milestone basis so the risk is shared.

- Oregon paid Oracle through a contract vehicle called the Dell Price Agreement. The name alone indicates this may not be the proper way to contract with Oracle. In total, Oregon signed 43 purchase orders worth $132M to Oracle.

- Oregon did not hire a systems integrator, a common practice in these types of projects.

- Oregon hired an independent QA contractor, Maximus. Maximus raised numerous concerns throughout the project. They never rated the project anything but High Risk.

- Lack of scope control, a delay in requirements definition, and unrealistic delivery expectations were cited as main issues.

- As late as two weeks before the October 1 launch, the Government PM was positive about the project: “Bottom Line: We are on track to launch.”

Who Keeps Moving the Goal Post?

One interesting point in the audit is the difficulty in accurately estimating how much effort it takes to do software development. As they were progressing, they were developing estimate-to-completion figures. As shown in the chart below, the more work they did, the higher the estimate-to-completion got. As they got closer to the end, the end got farther away. D’oh! In general, this shouldn’t happen. The more “actuals” you have, the better your estimates should be.

Who Knew this Project Would Fail? These Guys Did.

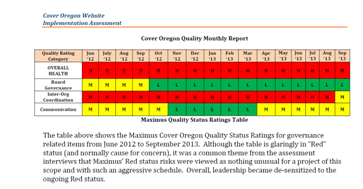

The audit quotes the QA contractor, Maximus, throughout the report. They appeared to have a good grasp of what was happening, pointing out risks throughout the project. A summary of their risk assessments is shown below. The top row, which is Overall Health, is red (high risk) from Day 1. Unfortunately, the report says the project team became “de-sensitized” by the risk reports since they were always bad. Perhaps Maximus should have started with a green (low risk) status.

If One Oversight Group is Good, More Must Be Better

There were several agencies that had oversight responsibilities. Naturally this caused confusion and blurred lines of responsibility. A common refrain was the lack of a single point-of-authority. The audit doesn’t make a recommendation but I will: Oversight should be the responsibility of a single group composed of all required stakeholders. I have seen many public sector projects whose leaders believe additional levels of oversight are helpful. They are not. Extra layers serve to absolve members of responsibility, because each group can always say it thought someone else was responsible for whatever went wrong. If there is only one oversight group, its members can’t point fingers anywhere else and they are more likely to do their job well.

Like Hitting a Bullet with Another Bullet

As noted above, Oregon combined ACA implementation with a complex project that determines if people eligible for healthcare subsidies are also eligible for other government programs.

The Standish Group produces a famous report (the CHAOS Report) that documents project success. The single most significant factor in project success is size. Small projects, defined as needing less than $1M in people cost, are successful 80% of the time while large projects, over $10M, are successful only 10% of the time. (Success is defined as meeting scope, schedule and budget goals.)

So Oregon decided to combine the high risk Obamacare website project, with a projected success rate of 10%, with another high risk project with success rate of 10%. That’s like hitting a bullet with another bullet.
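To put a rough number on that, here is a back-of-the-envelope sketch. It uses the success rates quoted above and assumes, purely for illustration, that the two projects succeed or fail independently of each other. That assumption is mine, not the audit's, and it probably does not hold for two projects sharing a team, a vendor, and a deadline, so treat the result as an illustration rather than a forecast.

```python
# Success rates as quoted above from the CHAOS Report.
SMALL_PROJECT_SUCCESS = 0.80   # under $1M in people cost
LARGE_PROJECT_SUCCESS = 0.10   # over $10M

def joint_success(*rates):
    """Chance that every project succeeds.

    ASSUMPTION: the projects are statistically independent.
    This is an illustrative simplification, not a claim from the audit.
    """
    result = 1.0
    for rate in rates:
        result *= rate
    return result

# Two large, high-risk projects that must both succeed.
combined = joint_success(LARGE_PROJECT_SUCCESS, LARGE_PROJECT_SUCCESS)
print(f"Chance both large projects succeed: {combined:.0%}")
```

Under that independence assumption, the chance of both efforts meeting scope, schedule and budget works out to about 1 in 100.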

Everything was On Track, Until It Wasn’t

As late as two weeks before launch, the Government PM reported that the launch was on track. The audit notes that the system failed a system test three days before launch, and the launch was postponed just one day before it was due. (It appears the system test conducted three days before launch was the first system test performed.) Even while announcing the delay, Oregon said the site would launch in two weeks (mid-October). By November, the launch consisted of a fillable PDF form that was manually processed. The site had yet to launch six months later (March 2014).

There is a common story told in project management: “How did the project get to be a year late?” “One day at a time.” By April before the October launch, the project was months behind. As the spring and summer progressed, the project fell further behind. And yet, the PM continued to believe they would “catch up” and finish on time. I don’t know if it’s ignorance or malfeasance at work here. But it is virtually impossible to “catch up.”
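A little arithmetic shows why catching up is so hard. The sketch below uses made-up numbers (they are not from the audit) and a hypothetical helper, `required_pace`, to compare how fast a team has actually been working with how fast it would need to work to finish on the original date.

```python
def pace_so_far(fraction_done, fraction_time_elapsed):
    """Actual pace relative to plan (1.0 means exactly on plan)."""
    return fraction_done / fraction_time_elapsed

def required_pace(fraction_done, fraction_time_elapsed):
    """Pace needed on the remaining work to hit the original end date,
    relative to the planned pace (1.0 means exactly on plan)."""
    remaining_work = 1.0 - fraction_done
    remaining_time = 1.0 - fraction_time_elapsed
    if remaining_time <= 0:
        raise ValueError("no schedule left to catch up in")
    return remaining_work / remaining_time

# HYPOTHETICAL numbers for illustration only: halfway through the
# schedule with 30% of the work complete.
done, elapsed = 0.30, 0.50

actual = pace_so_far(done, elapsed)
needed = required_pace(done, elapsed)
print(f"Pace so far:   {actual:.2f}x plan")
print(f"Pace required: {needed:.2f}x plan")
print(f"Speed-up needed over actual pace: {needed / actual:.2f}x")
```

In this made-up case, a team that has been delivering at 60% of the planned pace would have to more than double its actual output for the rest of the project, with the same people and the same scope. Every week that passes without that speed-up pushes the required pace higher still.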

One funny (in a sad way) part: the development effort was planned for 17 iterations. So what happened when they completed iteration 17 and still weren’t done? Iteration 17a, 17b, and 17c. Ugh. Also, the use of the word iteration implies an Agile methodology but this isn’t indicated in the audit. It wouldn’t surprise me if Agile was ~~abused~~ ~~misused~~ used on this project.

The audit has many lessons learned. I encourage you to read it all, especially if you are undertaking a large system implementation in the public sector.