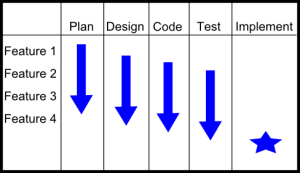

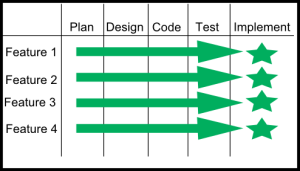

I describe the difference between the traditional waterfall methodology and an Agile methodology as going vertical versus going horizontal. You can see this in the two pictures above.

The first picture represents a typical waterfall project schedule. You do all the planning, then all the design, then all the coding, then all the testing, and then you implement. This is a simplification: the phases usually overlap somewhat (hence "waterfall"), but it is broadly accurate. I call this "going vertical."

The second picture shows how the same project would be run using an Agile methodology. You take Feature 1 through planning, design, coding, testing, and possibly implementation before moving on to Feature 2. I call this "going horizontal."

Each technique has clear advantages and drawbacks. Agile (going horizontal) takes you through every phase early in the project, so you discover coding or testing issues on the first pass and can improve with each iteration. The downside is that you may discover something while designing Feature 4 that forces you to rewrite earlier functionality. In short, Agile increases the probability of rework but lowers the risk of discovering issues late in the schedule.

In the traditional waterfall methodology ("going vertical"), you rarely have to redesign anything, because you take everything into account during the single design phase. The drawback is that you don't discover coding or testing problems until late in the schedule, perhaps too late to fix them easily.

When appropriate, I use Agile because it lowers a project's risk. However, Agile is not a good fit for many types of projects or for some companies, and the majority of projects are still managed using a waterfall methodology.